Questions in Search of Answers

If Facebook can develop software to suppress real news in China, why can’t it deploy software to suppress fake news in America? Facebook has been much in the news lately – facing accusations ranging from influencing the presidential election to “contributing to the balkanization of America” by “[narrowing] our sight-lines and reinforcing what we already believe” to cozying up to Chinese officials to gain access to the enormous Chinese market – all the while denying any journalistic role, despite the fact that it is a source of news for 44 percent of Americans.

“I am confident we can find ways for our community to tell us what content is most meaningful,” Facebook founder Mark Zuckerberg said recently, “but I believe we must be extremely cautious about becoming arbiters of truth ourselves.”

The more I read his statement, the less I understand it. It’s a kind of Rorschach test, open to our own interpretation. And therein lies the danger – for while I certainly don’t want Facebook’s engineers becoming the arbiters of truth, I wonder exactly what community is defining the “most meaningful” content.

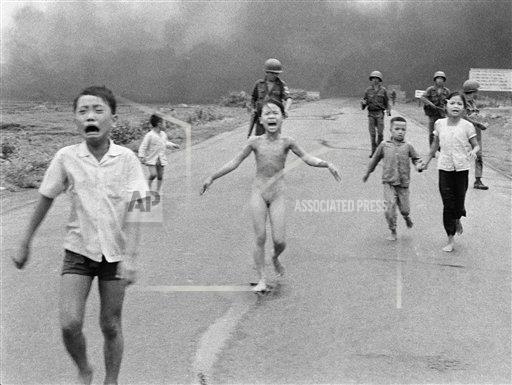

It’s not an idle question. Facebook recently removed (and then reinstated under pressure) Nick Ut’s The Terror of War, the most affecting and important photo of the Vietnam War, because “it’s difficult to create a distinction between allowing a photograph of a nude child in one instance and not others.”

Is Guernica next?

So maybe a more important question than the one that opened this post is: Should we be asking Facebook to suppress anything – particularly if it can’t or won’t distinguish between child pornography and The Terror of War? Doesn’t it have enough power already? Then where does that leave us in the discussion of fake news and the First Amendment?